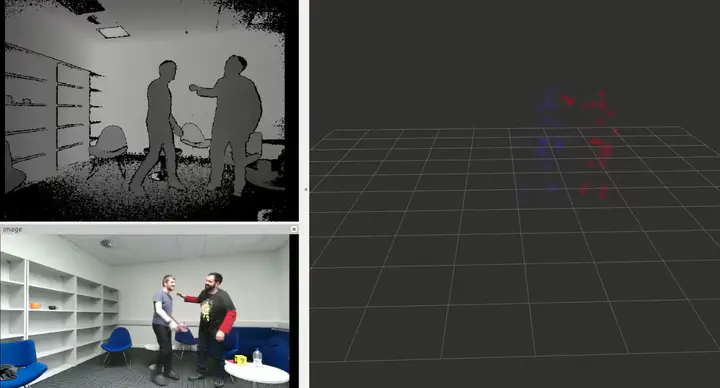

Screenshot of the training environment.

The dataset is composed of 20 long RGB-D videos. Each video provides RGB-D images and the tracked skeletons of two people performing different social and individual activities. Each session is stored in a separate folder, which contains compressed full HD RGB and depth images and two files with the skeletons of the two actors in the scene. Each row of a skeleton text file contains the positions (6 DoF) of 25 joints. Each video also comes with a labelling file containing the time intervals of each social activity in the video.
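As a rough illustration of the skeleton layout described above, the following Python sketch splits one row of a skeleton text file into 25 joints of 6 values each. The exact file format is not specified here, so several things are assumptions: that a row holds exactly 25 × 6 = 150 numeric values, that values are separated by commas and/or whitespace, and that the 6 values of a joint are stored contiguously. The function name `parse_skeleton_row` is hypothetical. If the real files carry extra columns (for example a timestamp), the check would need adjusting.

```python
import re
from typing import List

N_JOINTS = 25  # from the dataset description
DOF = 6        # from the dataset description; field order within a joint is an assumption


def parse_skeleton_row(row: str) -> List[List[float]]:
    """Split one text row into N_JOINTS lists of DOF floats (assumed layout)."""
    # Assumption: values are separated by commas and/or whitespace.
    tokens = [t for t in re.split(r"[,\s]+", row.strip()) if t]
    values = [float(t) for t in tokens]

    # Assumption: the row contains only joint data, with no extra columns.
    expected = N_JOINTS * DOF
    if len(values) != expected:
        raise ValueError(f"expected {expected} values, got {len(values)}")

    # Group the flat list into one 6-element list per joint.
    return [values[i * DOF:(i + 1) * DOF] for i in range(N_JOINTS)]
```

Calling `parse_skeleton_row(line)` on each line of a skeleton file would then give one list of 25 joints per row, and a row with an unexpected number of values raises `ValueError` rather than being silently misaligned.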

@Article{Coppola2019,
  Title   = {Social Activity Recognition on Continuous RGB-D Video Sequences},
  Author  = {C. Coppola and S. Cosar and D. Faria and N. Bellotto},
  Journal = {International Journal of Social Robotics},
  Year    = {2019},
  Doi     = {https://doi.org/10.1007/s12369-019-00541-y}
}