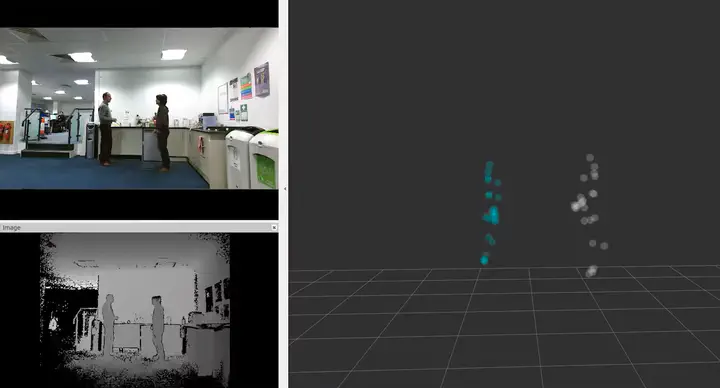

Screenshot of the training environment.

The dataset comprises 10 sessions. Each session provides RGB-D images and skeleton tracks for 2 long videos of different activities performed by two people. Each session is zipped into a separate file containing a folder with the skeleton tracks in text format, along with the RGB (24-bit) and depth images. Each row of the skeleton text file contains position information (6 DoF) for 25 joints.
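As a rough illustration of the row structure described above, the following Python sketch splits one skeleton row into 25 joints of 6 values each. The exact file layout is not specified here, so the separator (commas and/or whitespace), the joint-by-joint ordering of the values, and the `n_leading` parameter (a hypothetical count of leading fields such as a timestamp) are all assumptions to check against the actual files. The function name `parse_skeleton_row` is likewise only illustrative.

```python
from typing import List

N_JOINTS = 25  # joints per skeleton row, as stated in the dataset description
DOF = 6        # values per joint (6 DoF), as stated in the dataset description


def parse_skeleton_row(row: str, n_leading: int = 0) -> List[List[float]]:
    """Split one row of a skeleton text file into 25 joints of 6 values each.

    ASSUMPTIONS (not confirmed by the dataset description):
    - fields are separated by commas and/or whitespace;
    - joint values are stored joint by joint, 6 values per joint;
    - `n_leading` is a hypothetical number of leading fields
      (for example a timestamp) to skip before the joint values.
    """
    fields = row.replace(",", " ").split()
    values = [float(f) for f in fields[n_leading:]]
    expected = N_JOINTS * DOF
    if len(values) != expected:
        raise ValueError(f"expected {expected} joint values, got {len(values)}")
    # Group the flat list into consecutive chunks of DOF values, one per joint.
    return [values[i * DOF:(i + 1) * DOF] for i in range(N_JOINTS)]


if __name__ == "__main__":
    # Synthetic row for demonstration only; real rows come from the skeleton file.
    demo_row = " ".join(str(float(i)) for i in range(N_JOINTS * DOF))
    joints = parse_skeleton_row(demo_row)
    print(len(joints), len(joints[0]))
```

If the real files carry extra fields per row or per joint, the length check raises a `ValueError` rather than silently misaligning the joints, which makes a wrong layout assumption easy to spot.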

@InProceedings{Coppola2017,

Title = {Automatic Detection of Human Interactions from RGB-D Data for Social Activity Classification},

Author = {C. Coppola and S. Cosar and D. Faria and N. Bellotto},

Booktitle = {IEEE Int. Symposium on Robot and Human Interactive Communication (RO-MAN)},

Year = {2017},

Pages = {871-876}

}